Facebook (now known as Meta) has reportedly proposed to the Wikimedia Foundation a new research project that would use AI to improve the accuracy of articles on Wikipedia, the left-leaning online “encyclopedia.”

Digital Trends recently reported that Facebook, in collaboration with the Wikimedia Foundation, has developed a machine learning model that can automatically scan hundreds of thousands of citations across Wikipedia at once to determine whether they support the corresponding claims made on the site.

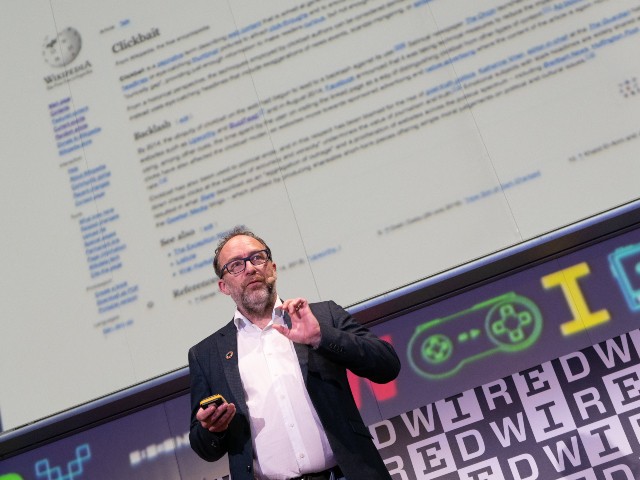

Jimmy Wales presents Wikipedia (Rosdiana Ciaravolo/Getty)

Mark Zuckerberg throws a javelin (Mark Zuckerberg/Facebook)

Fabio Petroni, research tech lead manager for the FAIR (Fundamental AI Research) team at Meta AI, said in a statement: “I think at the end of the day we are driven by curiosity. We want to see what the limits of this technology are. We are not really sure [this AI] can do something meaningful in this context. No one has ever tried to do such a thing [before].”

The machine learning model was trained on a dataset of 4 million Wikipedia citations. The new tool can efficiently analyze the information associated with a citation and then cross-reference it with supporting evidence.

Petroni commented: “There is a component [looking at] lexical similarity between the claim and the source, but this is the easy case. Using these models, we create an index by splitting all these web pages into passages and providing a complete representation for each passage… This is the meaning of the passage, not the literal representation of the passage. This means that two pieces of text with the same meaning will be represented very close together in the n-dimensional space where all these passages are stored.”
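The passage index Petroni describes can be illustrated with a minimal sketch. This is not Meta's model or code: the `CONCEPTS` table is a hypothetical, hand-built stand-in for a learned neural encoder, and every function name here is an assumption made for illustration. It only shows the general idea of splitting pages into passages, mapping each passage to a vector, and matching a claim to the passage whose vector lies closest.

```python
import math

# Hypothetical toy "encoder": words with the same meaning share one axis.
# A real system would use a learned neural encoder; this table is only
# an illustrative stand-in.
CONCEPTS = {
    "born": 0, "birth": 0,
    "city": 1, "town": 1,
    "died": 2, "death": 2,
    "film": 3, "movie": 3,
    "award": 4, "prize": 4, "won": 4,
}
DIM = 5


def embed(text):
    """Map text to a unit-length vector over the toy concept axes."""
    vec = [0.0] * DIM
    for word in text.lower().split():
        axis = CONCEPTS.get(word.strip(".,;:!?\"'()"))
        if axis is not None:
            vec[axis] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


def split_into_passages(page, size=12):
    """Split a page into consecutive passages of at most `size` words."""
    words = page.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def build_index(pages):
    """Store every passage alongside its vector representation."""
    return [(p, embed(p)) for page in pages for p in split_into_passages(page)]


def similarity(a, b):
    """Dot product; equals cosine similarity for unit-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def best_passage(claim, index):
    """Return the indexed passage whose vector is closest to the claim's."""
    query = embed(claim)
    return max(index, key=lambda item: similarity(query, item[1]))[0]


pages = [
    "The director received a prize for the movie in 1999.",
    "She spent her childhood in a small town near the coast.",
]
index = build_index(pages)
match = best_passage("He won an award for his film", index)
```

In this toy setup the claim and the matched passage share almost no words, yet they land close together because “award”/“prize” and “film”/“movie” map to the same axes, which is the behavior the quote attributes to meaning-based representations.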

While the tool can detect fake citations, it can also suggest better links for existing citations. However, Petroni said there is still a lot of work to be done before the tool can be used. “What we’re doing is a proof of concept. It is not really usable right now. For this to work, you need a new index that covers more data than we currently have. It has to be constantly updated, with new information coming in every day.”

Breitbart News often reports on Wikipedia’s left-wing bias. Recently, the site’s editors feverishly edited an article on the recession to better reflect the Biden administration’s message.

Breitbart News correspondent Allum Bokhari wrote:

The editors of the left-leaning online encyclopedia have defined “recession” unusually broadly, supporting the Biden administration’s claim that the country is not in one. The National Bureau of Economic Research (NBER) definition states that a recession is “a significant decline in economic activity spread across the market, lasting more than a few months.”

What had until recently been the general consensus definition of a recession, two consecutive quarters of negative GDP growth, is at the top of the page, but the editors are trying to remove it. This definition is also referred to as the UK definition.

The article continues in its definition section: “In a 1975 New York Times article, economic statistician Julius Shiskin proposed a few rules of thumb for defining a recession, one of which was two consecutive quarters of negative GDP growth.”

Read more at Digital Trends here.

Source: Breitbart